The Dark Side of Generative AI: Exaggerating Car Crash Damage for Insurance Fraud

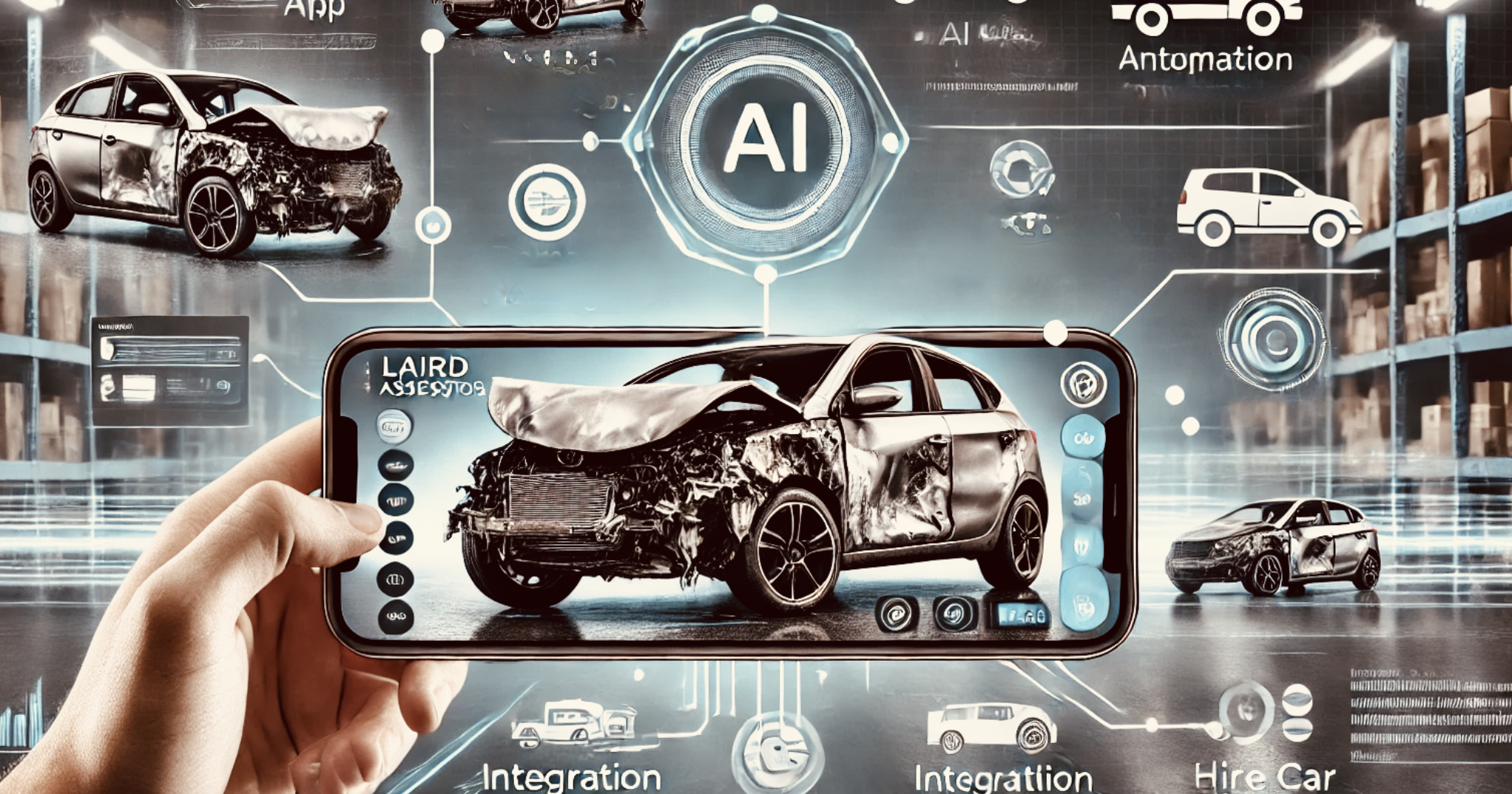

In recent years, advances in artificial intelligence (AI) have transformed a range of industries. One of the most significant developments has been generative AI, a subset of AI that creates new content, such as images, text, or audio, from a given input. While generative AI has been celebrated for its potential to revolutionise creative industries, it also presents new challenges, particularly in the realm of insurance fraud.

The Rise of Generative AI in Image Manipulation

Generative AI has become increasingly adept at manipulating images, with the ability to generate realistic and convincing alterations that are difficult to detect with the naked eye. This technology has been applied in numerous fields, from entertainment to marketing, but it also holds the potential for more nefarious uses, particularly in the context of car insurance claims.

Using generative AI, individuals can manipulate images of car crash damage so that a vehicle appears far more damaged than it actually is; in some cases the car has not sustained any damage at all. This fraudulent practice can lead to inflated insurance claims, costing insurance companies millions of pounds a year and, ultimately, driving up premiums for honest policyholders.

How Generative AI Exaggerates Damage

The process of exaggerating car damage using generative AI typically involves several steps:

- Image Input: A legitimate image of a car accident is provided as input to the AI system.

- Damage Amplification: The AI then analyses the existing damage and generates additional damage, seamlessly integrating it into the image. This can include adding more dents, cracks, or broken parts, or even altering the structure of the car to make it look as if it has suffered a more severe impact.

- Realism Enhancement: To make the manipulated image convincing, generative AI applies realistic textures, shadows, and reflections, ensuring that the fabricated damage appears genuine.

- Submission for Claim: The manipulated image is then submitted to the insurance company as evidence of the supposed damage, often accompanied by a falsified narrative to support the claim.

The Impact on the Insurance Industry

The implications of this type of fraud are significant. Insurance companies rely heavily on photographic evidence to assess the validity of claims. When images are manipulated with generative AI, it becomes increasingly difficult for human assessors, and even for traditional image analysis tools, to distinguish between legitimate and fraudulent claims. This leads to increased payouts for fraudulent claims, contributing to higher overall costs for the industry.

In response, insurance companies may be forced to raise premiums for all customers to offset these losses, creating a financial burden for honest policyholders. Furthermore, the prevalence of such fraud can erode trust in the claims process, making it more challenging for genuine claimants to receive fair compensation.

Countering Generative AI Fraud

As the threat of generative AI-driven fraud grows, the insurance and assessing industries must adopt new strategies to combat it. Several approaches can be taken:

- Advanced AI Detection Systems: Just as generative AI can be used to manipulate images, AI can also be harnessed to detect such manipulations. By training AI models to identify inconsistencies in images, assessors and insurers can develop tools capable of flagging suspicious claims for further investigation.

- Forensic Analysis: Extending forensic analysis techniques to cover AI-generated images can help identify manipulation. This might involve analysing pixel patterns, checking for inconsistencies in shadows and reflections, or comparing the image with a database of known damage images.

- Blockchain for Image Verification: Using blockchain technology to create a secure, tamper-evident record of images at the time of the accident can help expose post-incident manipulation. By recording the original images, or a cryptographic fingerprint of them, on a blockchain, insurance companies can check that the images submitted with claims are identical to those captured at the scene.

- Education and Awareness: Insurance companies should also focus on educating their employees and customers about the risks of generative AI fraud. By raising awareness, they can encourage vigilance and early detection of potential fraud attempts.

- Regulatory Measures: Governments and regulatory bodies can play a crucial role by establishing guidelines and standards for the use of AI in insurance. This could include mandating the use of certain detection technologies or requiring companies to verify the authenticity of images submitted with claims.
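
The image verification idea in the list above can be illustrated with a small sketch. It is not a real blockchain and not any insurer's actual system: it assumes a hypothetical in-memory `ImageLedger` class that stores a SHA-256 fingerprint of each photo at capture time and chains the entries together by hash, so that later edits to either a photo or the log become detectable. The class name, method names, and claim IDs are all illustrative assumptions.

```python
import hashlib
import json
import time


def fingerprint(image_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of the raw image bytes."""
    return hashlib.sha256(image_bytes).hexdigest()


class ImageLedger:
    """Hypothetical append-only, hash-chained log of image fingerprints."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(body: dict) -> str:
        # Serialise deterministically so the same body always hashes the same.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def register(self, claim_id: str, image_bytes: bytes, timestamp=None) -> dict:
        """Record an image fingerprint, linked to the previous entry."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        body = {
            "claim_id": claim_id,
            "image_hash": fingerprint(image_bytes),
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": prev_hash,
        }
        entry = dict(body, entry_hash=self._entry_hash(body))
        self.entries.append(entry)
        return entry

    def matches_original(self, claim_id: str, image_bytes: bytes) -> bool:
        """True if these exact bytes were registered for this claim."""
        digest = fingerprint(image_bytes)
        return any(
            e["claim_id"] == claim_id and e["image_hash"] == digest
            for e in self.entries
        )

    def chain_is_intact(self) -> bool:
        """Recompute every link; any edited entry breaks the chain."""
        prev_hash = self.GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "entry_hash"}
            if e["prev_hash"] != prev_hash or self._entry_hash(body) != e["entry_hash"]:
                return False
            prev_hash = e["entry_hash"]
        return True
```

A claims handler could call `register` when the photo is taken at the scene and `matches_original` when the claim arrives. One limitation worth noting: a cryptographic hash only confirms that a file is byte-for-byte identical, so a photo that has merely been resized or recompressed would also fail the check and would need to be reviewed by other means.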

Generative AI offers incredible possibilities, but its misuse in exaggerating car crash damage for insurance fraud is a growing concern. As the technology continues to evolve, so too must the strategies to counteract its negative impacts. By investing in advanced detection systems, enhancing forensic capabilities, and fostering collaboration between industry stakeholders, the insurance sector can stay ahead of this emerging threat and protect both companies and customers from the financial consequences of AI-driven fraud.